Structured markup is crucial for e-commerce websites that want to stand out in the SERPs. Because e-commerce sites are generally built to scale, with large numbers of product pages sharing the same templates, scraping their information is relatively easy. All it takes is a Screaming Frog crawl and OutWit Hub.

For dropshippers and affiliate sites, harvesting competitor data from schema markup tags can be extremely useful. If you sell the same products as your competitors, you can compare pricing, product descriptions, calls to action, special promotions, or anything else on the page, and analyze how you stack up.

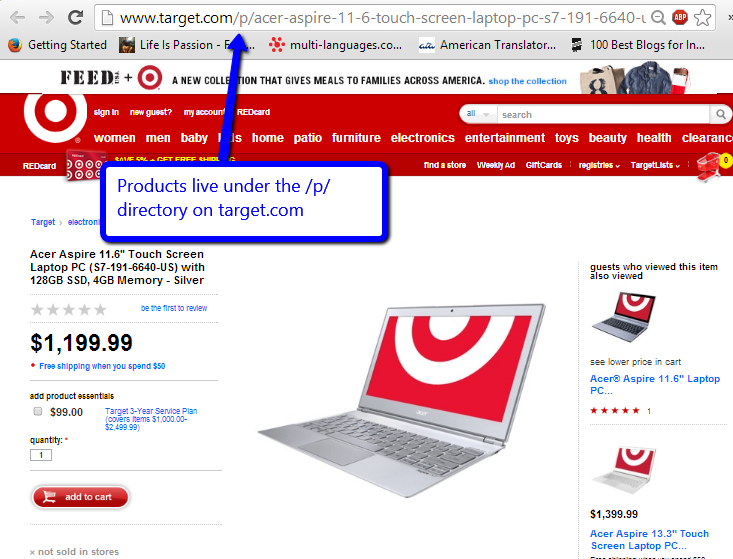

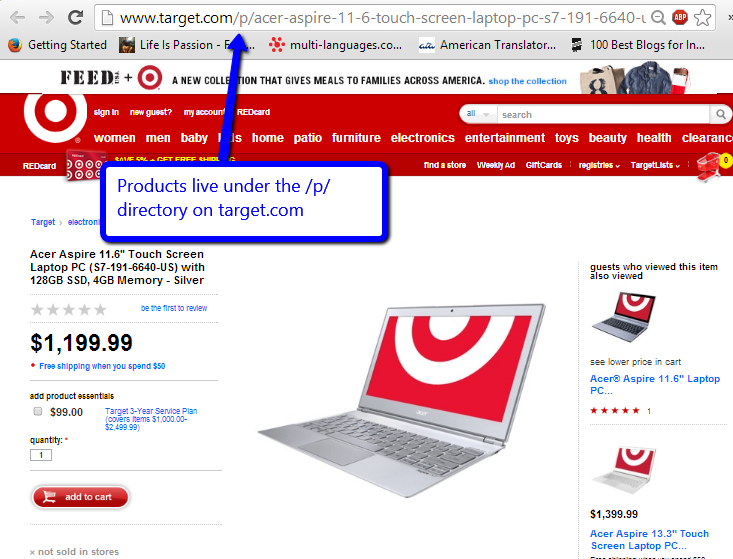

Before we start, we need to figure out where products live on the competitor's site. If your competitor has a clearly built-out information architecture, this shouldn’t be too tough. Target.com, for example, uses the /p/ directory for its product pages.
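If the product directory isn't obvious, one rough heuristic (not part of the original workflow) is to count the first path segment across a sample of crawled URLs and see which one dominates. The function name and sample URLs below are hypothetical, for illustration only:

```python
from collections import Counter
from urllib.parse import urlparse


def top_directories(urls, n=5):
    """Count the first path segment of each URL (e.g. 'p' for /p/...)."""
    counts = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        if segments:
            counts[segments[0]] += 1
    return counts.most_common(n)


# Hypothetical sample URLs, for illustration only.
sample = [
    "https://www.target.com/p/example-laptop-1/-/A-00000001",
    "https://www.target.com/p/example-laptop-2/-/A-00000002",
    "https://www.target.com/c/laptops/-/N-00000",
    "https://www.target.com/p/example-tablet/-/A-00000003",
]
print(top_directories(sample))
```

A directory that accounts for most of the crawl is a good candidate, but confirm it by opening a few of the URLs.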

Step 1) Crawl and Collect Product Pages

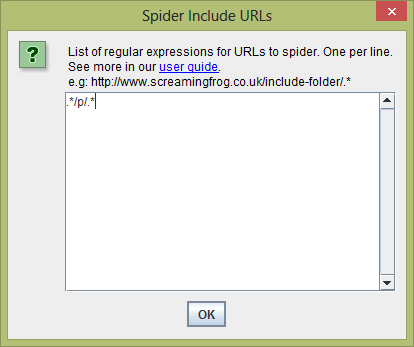

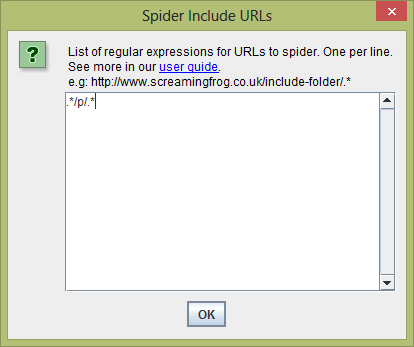

To crawl only the pages that live under the /p/ directory, fire up Screaming Frog, go to Configuration > Include, and add the pattern `.*/p/.*`

Now your Screaming Frog export will only include product pages.
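The include rule is a regular expression. A minimal sketch of the same filter in Python, assuming the pattern has to match the whole URL (which is why it is wrapped in `.*` on both sides); `product_urls` and the sample URLs are hypothetical:

```python
import re

# The include pattern entered under Configuration > Include.
# Assumption: it must match the whole URL, so re.fullmatch is used here.
INCLUDE_PATTERN = re.compile(r".*/p/.*")


def product_urls(urls):
    """Keep only the URLs that match the include pattern."""
    return [url for url in urls if INCLUDE_PATTERN.fullmatch(url)]


# Hypothetical URLs, for illustration only.
crawled = [
    "https://www.target.com/p/example-laptop/-/A-00000001",
    "https://www.target.com/c/laptops/-/N-00000",
    "https://www.target.com/pages/help",
]
print(product_urls(crawled))
```

Note that /pages/help is excluded: the trailing slash in `/p/` keeps the pattern from matching any path that merely starts with "p".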

So everyone can follow along and work with the same data, I’ve gone ahead and scraped all the laptops that are currently listed on the Target.com site, which you can get here:

List of Target Laptops (09/10/2013)

Step 2) Analyze Structured Markup and On-Page Elements

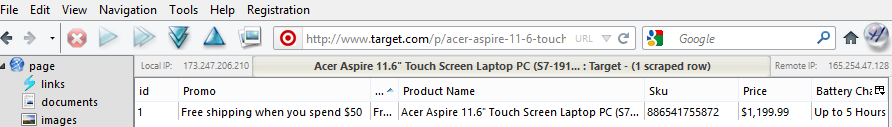

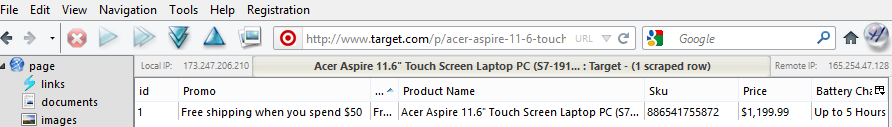

Take one of the product pages from your Screaming Frog export. For this example, we’ll use the Acer Aspire 11.6″ Touch Screen Laptop PC page. If you enter its URL into the Rich Snippets Testing Tool, you can see that Target uses a ton of structured markup on its product pages.

For this exercise, we’re going to scrape:

- Price

- SKU

- Product Name

- Battery Charge Life (non-schema element)

- Call to action/Promotion (non-schema element)
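The three schema fields are usually exposed as schema.org microdata, that is, HTML attributes such as `itemprop="price"`. A minimal sketch using Python's built-in `html.parser` to pull them out, assuming simple microdata where each value is either a `content` attribute or the first text inside the tag. The sample markup is hypothetical, not Target's actual source, and the two non-schema elements need the marker approach instead:

```python
from html.parser import HTMLParser


class ItempropParser(HTMLParser):
    """Collect text or content= values for selected schema.org itemprop names."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.found = {}
        self._pending = None  # itemprop waiting for its first text node

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("itemprop")
        if prop in self.wanted and prop not in self.found:
            if attrs.get("content") is not None:
                self.found[prop] = attrs["content"].strip()
            else:
                self._pending = prop

    def handle_data(self, data):
        if self._pending and data.strip():
            self.found[self._pending] = data.strip()
            self._pending = None


def extract_itemprops(html, wanted=("name", "sku", "price")):
    parser = ItempropParser(wanted)
    parser.feed(html)
    parser.close()
    return parser.found


# Hypothetical product markup, for illustration only.
SAMPLE_HTML = """
<div itemscope itemtype="http://schema.org/Product">
  <h1 itemprop="name">Example Touch Screen Laptop</h1>
  <meta itemprop="sku" content="12345678">
  <div itemprop="offers" itemscope itemtype="http://schema.org/Offer">
    <span itemprop="price">$399.99</span>
  </div>
</div>
"""
print(extract_itemprops(SAMPLE_HTML))
```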

Step 3) Fire up OutWit Hub

OutWit Hub is a desktop scraper and data harvester. It costs $60 a year and is well worth it. OutWit Hub can use cookies, so scraping behind a paywall or on a password-protected site is a non-issue. Instead of writing XPath to scrape data, OutWit Hub lets you highlight the source code and set markers to scrape everything that lies between them. If you are not a technical marketer and you find yourself spending a lot of time collecting data, this is a good tool to have in your arsenal.

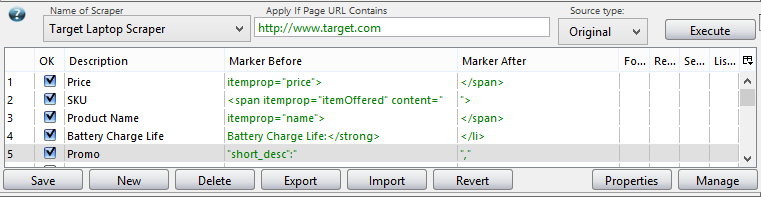

Step 4) Build Your Scraper

This may be intimidating at first, but it’s far more scalable than trying to use Excel or Google Docs to scrape thousands of data points.

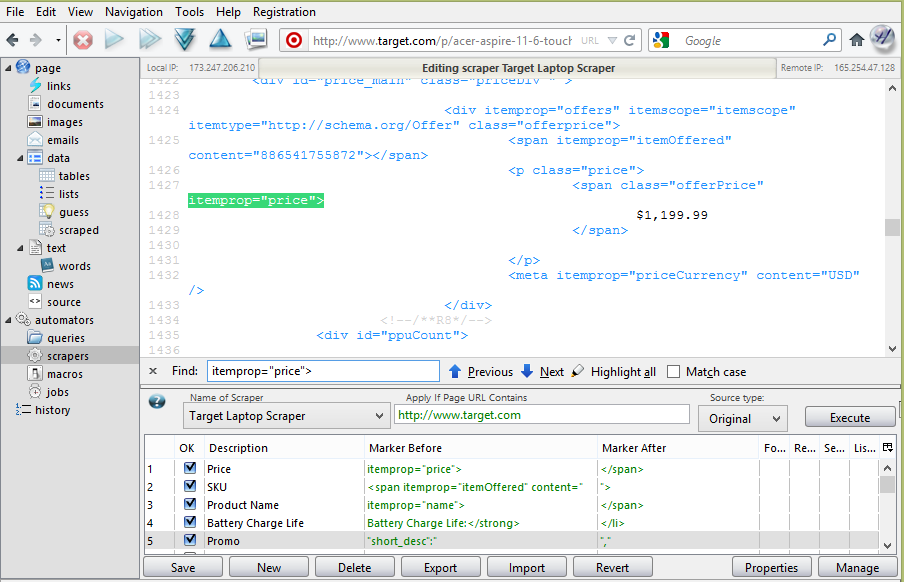

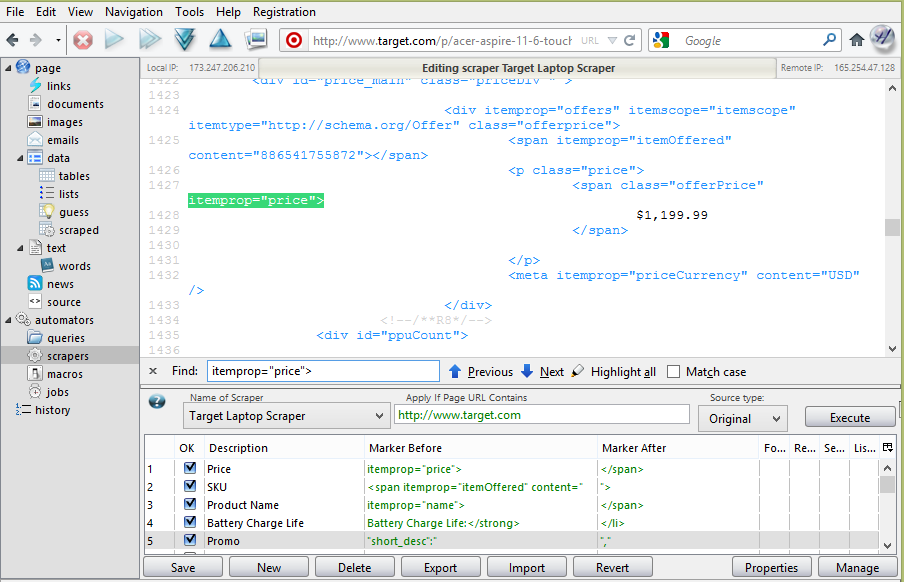

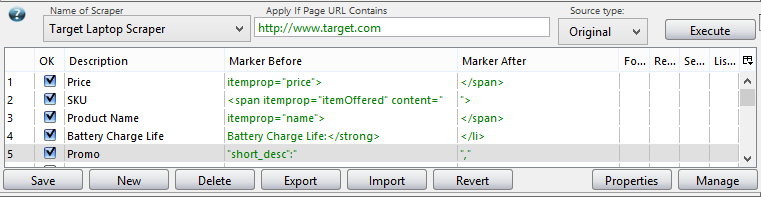

In the right-hand menu, click on Scrapers. Enter the example Target URL. This will load the source code.

Click the “New” button on the lower portion of the screen and name your scraper. I’m calling mine “Target Laptop Scraper.”

In the search box, start entering the markup for the schema tags you want to scrape. Remember, this isn’t XPath: you don’t need to worry about the DOM. You only need to figure out what unique source code comes before the element (the schema tag) and what comes after it.
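To make the before/after idea concrete, here is a minimal Python sketch of marker-based extraction. It illustrates the concept only, not OutWit Hub's implementation, and assumes the markers are literal strings. The markers and the source snippet are hypothetical; copy the real ones from the page source:

```python
def between(source, before, after):
    """Return the text between the first `before` marker and the next `after` marker."""
    start = source.find(before)
    if start == -1:
        return None
    start += len(before)
    end = source.find(after, start)
    if end == -1:
        return None
    return source[start:end].strip()


def scrape_page(source, markers):
    """Apply {field: (before, after)} markers to one page's source code."""
    return {field: between(source, before, after)
            for field, (before, after) in markers.items()}


# Hypothetical markers and source snippet, for illustration only.
MARKERS = {
    "battery_life": ("Battery Charge Life:</span>", "</li>"),
    "promotion": ('<div class="promo">', "</div>"),
}
SAMPLE = ('<li><span>Battery Charge Life:</span> Up to 4 hours</li>'
          '<div class="promo">Example promotion text</div>')
print(scrape_page(SAMPLE, MARKERS))
```

The "before" marker has to be unique on the page; if it also appears earlier in the source, the first occurrence wins and you get the wrong value.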

Extreme Close Up!

It will take some practice at first, but once you get the hang of it, it will only take a few minutes to set up a custom scraper.

Step 5) Test Your Scraper

Once you’re done entering the markers for the data you want to collect, click the Execute button and test your results. You should see something like this:

Step 6) Put the list of URLs into a .txt file and save it.

Any of these storage devices or your local machine will do.

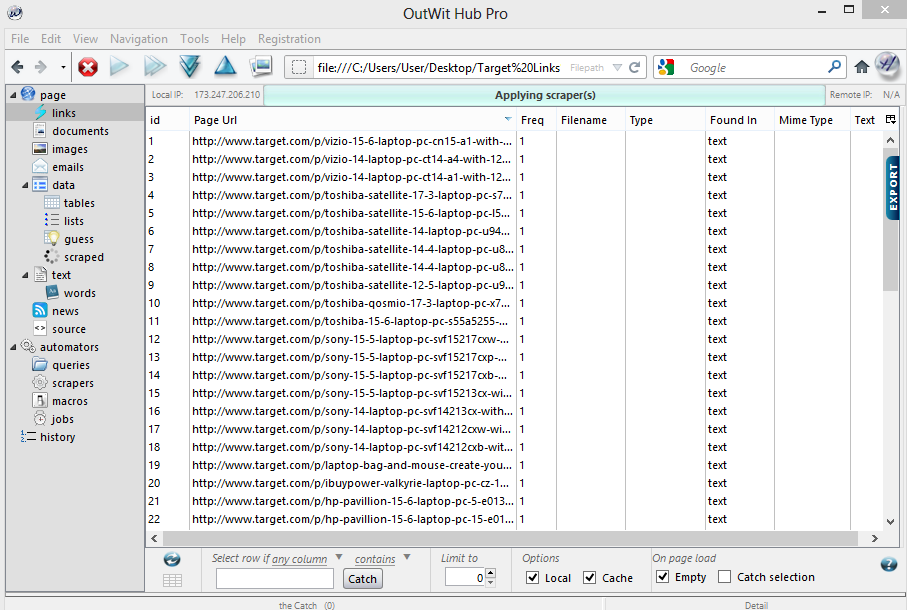

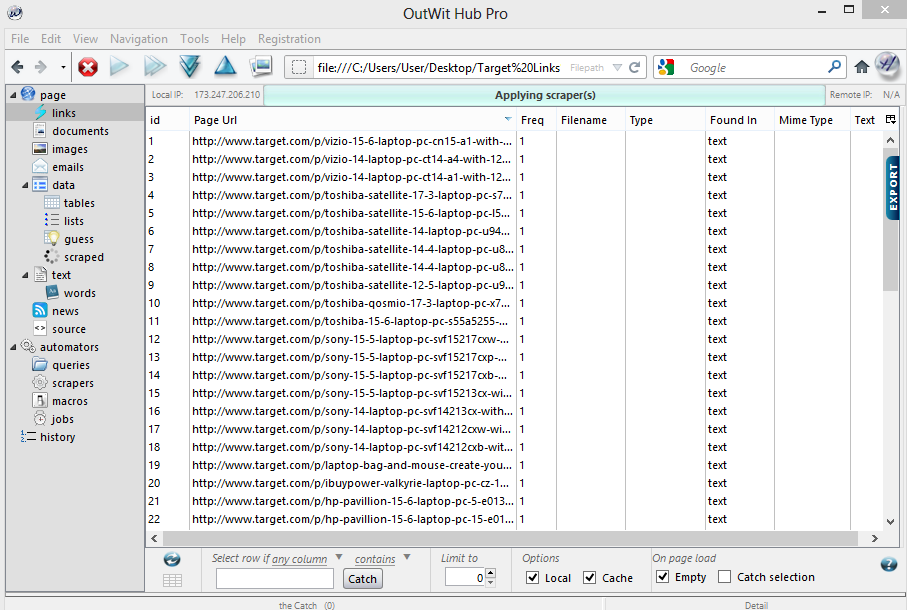
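If you'd rather build the .txt file from the Screaming Frog CSV export than copy and paste, a minimal sketch follows. It assumes the URL column is named "Address" (check your export's header row; if there is an extra title line above it, skip that line first) and writes one URL per line. The function names and sample rows are hypothetical:

```python
import csv
import io


def urls_from_export(csv_lines, column="Address"):
    """Read URLs from a crawl export. The 'Address' column name is an assumption."""
    reader = csv.DictReader(csv_lines)
    return [row[column].strip() for row in reader if row.get(column)]


def write_url_list(urls, path):
    """Write one URL per line to a plain .txt file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(urls) + "\n")


# Hypothetical two-row export, for illustration only.
sample_export = io.StringIO(
    "Address,Status Code\n"
    "https://www.target.com/p/example-laptop-1/-/A-00000001,200\n"
    "https://www.target.com/p/example-laptop-2/-/A-00000002,200\n"
)
print(urls_from_export(sample_export))
```

With a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object to `urls_from_export`, then call `write_url_list` on the result.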

Step 7) Open the .txt file in OutWit Hub using the File menu

In the left-hand navigation, just under the main directory, there is a subdirectory called “Links.” Click on it. This is what you should see:

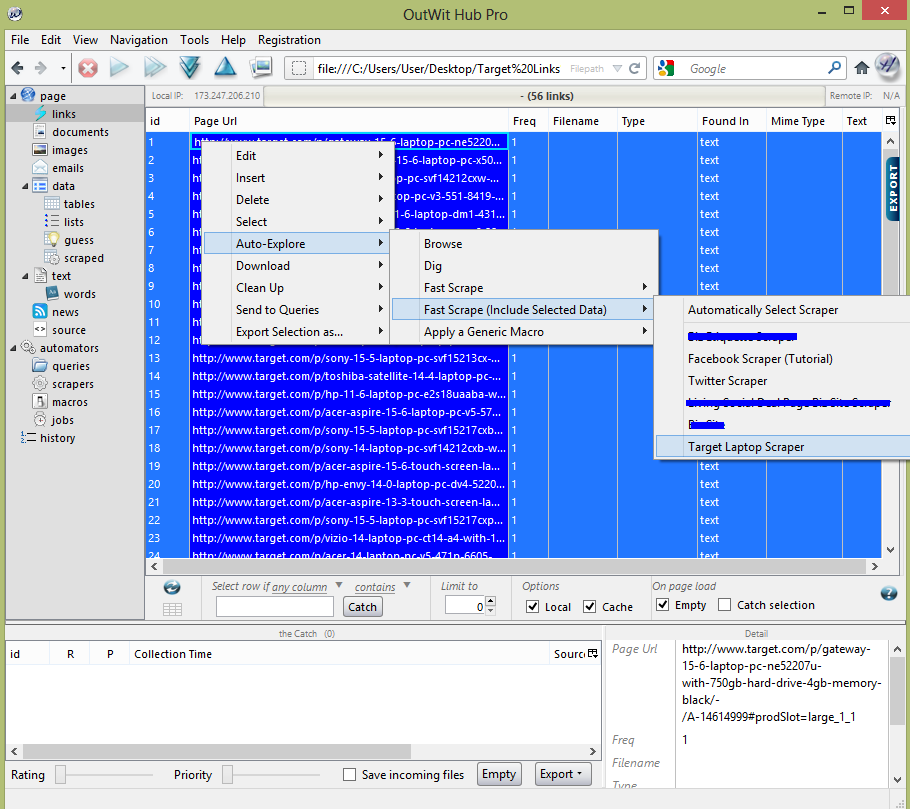

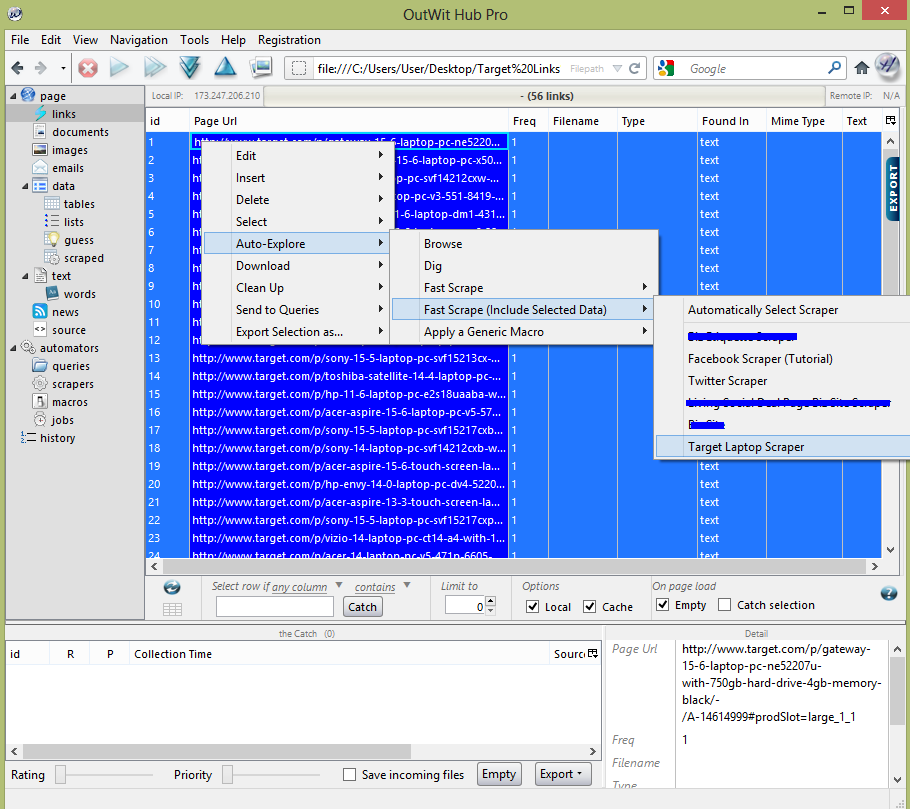

Select all the data using Control+A, then right-click on the row with all the URLs.

Step 7) Fast Scrape!

In the right click menu, select: Auto-Explore >Fast Scrape (Include Selected Data) > And select the scraper we just built together.

Here’s a video of the last step in Outwit

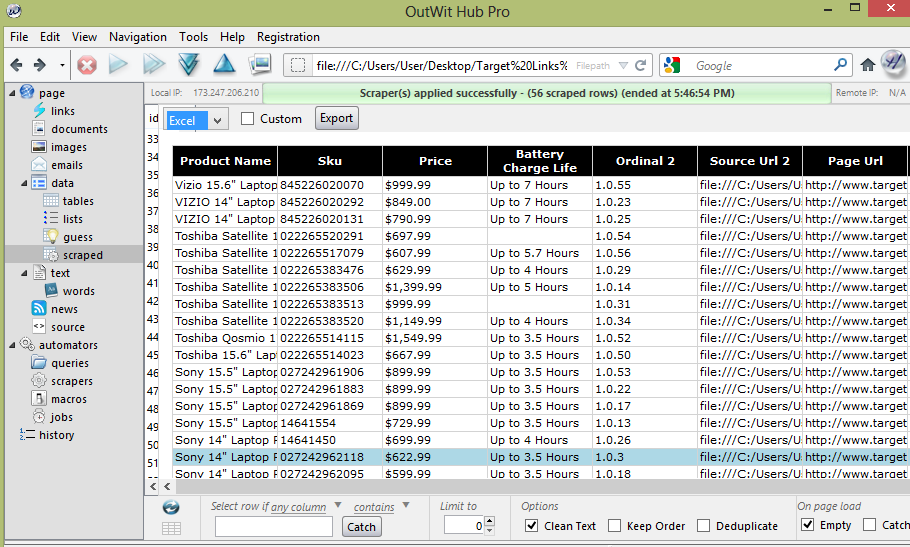

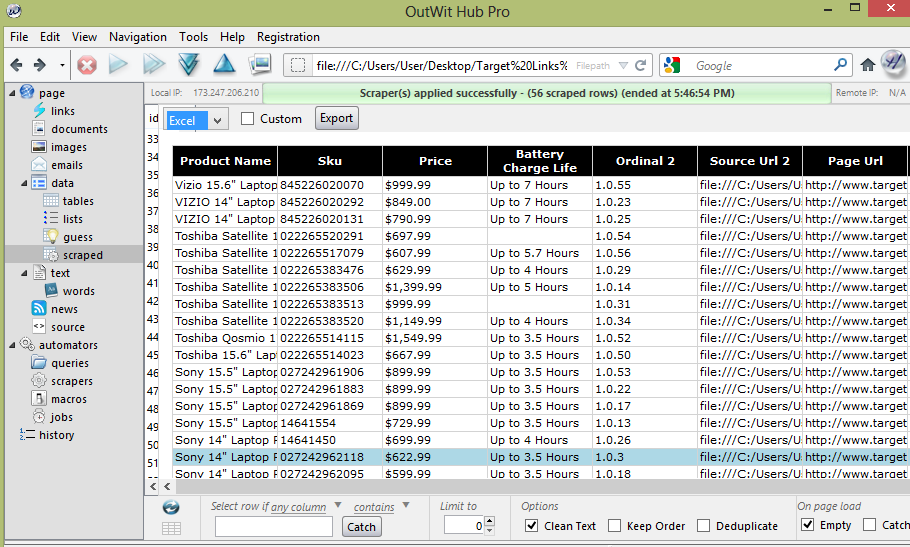

Step 8) Bask in the glory of your competitor’s data

In the left-hand navigation, there is a category called “data” with a subcategory called “scraped.” If you navigate away from your results, that’s where they are stored. Be careful not to load a new URL in OutWit Hub, or the data will be overwritten and you will have to scrape everything again.
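For readers who want a scripted equivalent of the Fast Scrape run, the core is a loop that fetches each URL and applies the same before/after markers. This is a sketch under assumptions, not a replacement for OutWit Hub: `fetch_url` is a bare-bones standard-library fetcher with no cookie handling, the one-second delay is an arbitrary politeness choice, and all names are hypothetical:

```python
import time
import urllib.request


def between(source, before, after):
    """Text between the first `before` marker and the next `after` marker, or None."""
    start = source.find(before)
    if start == -1:
        return None
    start += len(before)
    end = source.find(after, start)
    return None if end == -1 else source[start:end].strip()


def fetch_url(url):
    """Download a page with the standard library (no cookies or retries)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def batch_scrape(urls, markers, fetch=fetch_url, delay=1.0):
    """Apply one marker-based scraper to every URL; returns one dict per page."""
    rows = []
    for url in urls:
        row = {"url": url}
        try:
            source = fetch(url)
        except Exception as exc:  # record the failure and keep going
            row["error"] = str(exc)
        else:
            for field, (before, after) in markers.items():
                row[field] = between(source, before, after)
        rows.append(row)
        time.sleep(delay)  # pause between requests
    return rows


# Offline usage with a fake fetcher; the second URL is missing on purpose.
pages = {"https://example.com/p/1": '<span class="price">$10</span>'}
rows = batch_scrape(
    ["https://example.com/p/1", "https://example.com/p/2"],
    {"price": ('<span class="price">', "</span>")},
    fetch=pages.__getitem__,
    delay=0,
)
print(rows)
```

Injecting `fetch` keeps the loop testable offline and lets you swap in a cookie-aware fetcher if the site requires a login.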

You can export your data to HTML, TXT, CSV, SQL, or Excel. I generally go with an Excel export and use VLOOKUP to combine the data with the original Screaming Frog crawl from Step 1.
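The VLOOKUP step is effectively a left join on the URL. A Python sketch of the same join, assuming the crawl export's URL column is "Address" and the scraped rows use "url" (both are assumptions; rename them to match your files). Function names and rows are hypothetical:

```python
import csv
import io


def vlookup_join(crawl_rows, scraped_rows, crawl_key="Address", scraped_key="url"):
    """Left-join scraped fields onto crawl rows by URL, like VLOOKUP in Excel."""
    scraped_by_url = {row[scraped_key]: row for row in scraped_rows}
    joined = []
    for row in crawl_rows:
        merged = dict(row)
        match = scraped_by_url.get(row[crawl_key], {})
        merged.update({k: v for k, v in match.items() if k != scraped_key})
        joined.append(merged)
    return joined


def write_csv(rows, out):
    """Write rows with the union of their keys as columns; missing cells are blank."""
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    writer = csv.DictWriter(out, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical rows, for illustration only.
crawl = [
    {"Address": "https://www.target.com/p/example-laptop-1/-/A-00000001", "Status Code": "200"},
    {"Address": "https://www.target.com/p/example-laptop-2/-/A-00000002", "Status Code": "200"},
]
scraped = [
    {"url": "https://www.target.com/p/example-laptop-1/-/A-00000001", "price": "$399.99"},
]
buffer = io.StringIO()
write_csv(vlookup_join(crawl, scraped), buffer)
print(buffer.getvalue())
```

One difference from VLOOKUP: if the scraped data contains the same URL twice, this dictionary lookup keeps the last row, whereas VLOOKUP returns the first match.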

Got any fun potential use cases?

Share them below in the comments!

Image source via Flickr user avargado

The post Scraping Schema Markup for Competitive Intelligence appeared first on SEOgadget.
